PDF version

Heteroskedasticity-robust standard errors

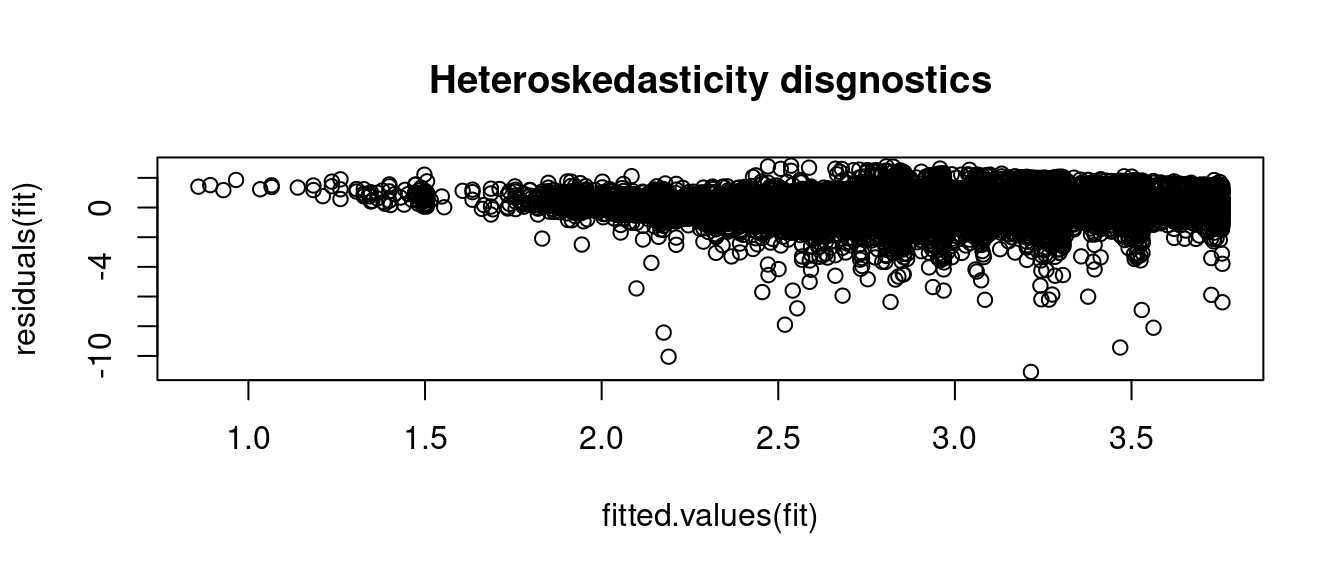

In the previous section, we discussed that exact inferential methods can be derived under homoskedasticity (A5) and normality (A6). These assumptions are quite restrictive for most practical applications. Let us now focus on the heteroskedastic regression model.

OLS Assumptions for the Heteroskedastic Model

The variables Y_i and X_i = (1, X_{i2}, \ldots, X_{ik})' satisfy the linear regression equation

Y_i = X_i' \beta + u_i, \quad i=1, \ldots, n,

which has the matrix representation \boldsymbol Y = \boldsymbol X \beta + \boldsymbol u.

For each i=1, \ldots, n we assume

(A1) conditional mean independence: E[u_i \mid X_i] = 0

(A2) random sampling: (Y_i, X_i') are i.i.d. draws from their joint population distribution

(A3) large outliers unlikely: 0 < E[Y_i^4] < \infty, 0 < E[X_{ij}^4] < \infty for all j=1, \ldots, k

(A4) no perfect multicollinearity: \boldsymbol X has full column rank

Recall that the conditional covariance matrix is \begin{align*}

Var[\widehat \beta \mid \boldsymbol X]

&= (\boldsymbol X'\boldsymbol X)^{-1} \boldsymbol X' \boldsymbol D \boldsymbol X (\boldsymbol X'\boldsymbol X)^{-1} \\

&= \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1} \bigg( \sum_{i=1}^n \sigma^2_i X_i X_i' \bigg) \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1}.

\end{align*} The standard deviation of the j-th coefficient estimate is

sd(\widehat \beta_j \mid \boldsymbol X) = \sqrt{\Bigg[\bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1} \bigg( \sum_{i=1}^n \sigma^2_i X_i X_i' \bigg) \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1}\Bigg]_{jj}}

It is not possible to standardize \widehat \beta_j using sd(\widehat \beta_j \mid \boldsymbol X) in practice because the conditional variances \sigma_i^2 = Var[u_i \mid X_i] are unknown.

Estimating \sigma_i^2 consistently for every i = 1, \ldots, n separately is impossible. However, it turns out that that the weighed average n^{-1} \sum_{i=1}^n \sigma^2_i X_i X_i' can be estimated consistently if we use the squared residuals \widehat u_i^2 as a proxy for the conditional variances \sigma_i^2:

\frac{1}{n} \sum_{i=1}^n \widehat u^2_i X_i X_i' \overset{p}{\longrightarrow} \frac{1}{n} \sum_{i=1}^n \sigma^2_i X_i X_i'

Therefore, a consistent covariance matrix estimator under heteroskedasticity is

\widehat{Var}_{\tiny HC0}[\widehat \beta] = \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1} \bigg( \sum_{i=1}^n \widehat u^2_i X_i X_i' \bigg) \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1},

and the corresponding standard error for the j-th coefficient estimate is

se_{\tiny HC0}(\widehat \beta_j) = \sqrt{\Bigg[\bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1} \bigg( \sum_{i=1}^n \widehat u^2_i X_i X_i' \bigg) \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1}\Bigg]_{jj}}.

It satisfies se_{\tiny HC0}(\widehat \beta_j)/sd(\widehat \beta_j \mid \boldsymbol X) \overset{p}{\to}1, and, together with the asymptotic normality result in Equation 10.4, we obtain

Z_{j,\tiny HC0} = \frac{\widehat \beta_j - \beta_j}{se_{\tiny HC0}(\widehat \beta_j)} \overset{D}{\longrightarrow} \mathcal N(0,1),

which is the heteroskedasticity-robust t-ratio.

Unfortunately, it is not possible to derive the exact distribution of Z_{j,\tiny HC0} even if (A6) is true or if (A5) and (A6) is true. However, the large sample approximation of Z_{j,\tiny HC0} by a standard normal distribution is typically quite accurate, even for small samples.

We use the label HC0 for this version of heteroskedasticity-robust standard errors. There are many other options (HC1–HC5). HC1 is the version that is implemented as the default standard error in many software packages (e.g., the “r” option in Stata), and is a bias-corrected version of HC0:

se_{\tiny HC1}(\widehat \beta_j) = \sqrt{\frac{n}{n-k}} \cdot se_{\tiny HC0}(\widehat \beta_j).

Another version is HC3, which is based on leave-one-out residuals:

se_{\tiny HC3}(\widehat \beta_j) = \sqrt{\Bigg[\bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1} \bigg( \sum_{i=1}^n \Big(\frac{\widehat u_i}{1-h_{ii}}\Big)^2 X_i X_i' \bigg) \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1}\Bigg]_{jj}},

where h_{ii} is the i-th leverage value. The idea is similar to the leave-one-out R-squared, where influential observations get much higher weights for standardization to account for their impact on the coefficient estimate. The corresponding full covariance matrix estimate is

\widehat{Var}_{\tiny HC3}[\widehat \beta] = \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1} \bigg( \sum_{i=1}^n \Big(\frac{\widehat u_i}{1-h_{ii}}\Big)^2 X_i X_i' \bigg) \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1}.

Recent research indicates that HC3 should be the preferred choice for conducting inference, but other HC-versions perform similarly well. Classical standard errors

se(\widehat \beta_j) = \sqrt{s^2 E[(\boldsymbol X' \boldsymbol X)^{-1}]_{jj}} = \sqrt{\bigg(\frac{1}{n-k} \sum_{i=1}^n \widehat u_i^2 \bigg) \bigg[ \Big( \sum_{k=1}^n X_k X_k' \Big)^{-1} \bigg]_{jj}}

should only be used if you have very good reasons that your error terms are homoskedastic.

| (Intercept) |

0.0164572 |

0.0181872 |

0.0181879 |

0.0181919 |

| education |

0.0009752 |

0.0010870 |

0.0010870 |

0.0010872 |

| experience |

0.0008074 |

0.0008851 |

0.0008851 |

0.0008856 |

| I(experience^2) |

0.0000167 |

0.0000194 |

0.0000194 |

0.0000194 |

The HC-robust standard errors are all quite similar. The classical standard errors differ from the robust obes and should not be used since homoskedasticity might not hold. In practice, there is no good reason not to use HC standard errors, even if there is evidence for homoskedasticity.

Robust confidence intervals

A heteroskedasticity-robust asymptotic 1-\alpha confidence interval for \beta_j is

I_{1-\alpha}^{\tiny HC} = \big[\widehat \beta_j - z_{(1-\frac{\alpha}{2})} se_{\tiny HC}(\widehat \beta_j); \widehat \beta_j + z_{(1-\frac{\alpha}{2})} se_{\tiny HC}(\widehat \beta_j)\big],

where se_{\tiny HC}(\widehat \beta_j) is any heteroskedasticity-robust standard error.

Instead of z_{(1-\frac{\alpha}{2})} you can also use t_{(n-k; 1-\frac{\alpha}{2})} since \lim_{n \to \infty} t_{(n-k; 1-\frac{\alpha}{2})} = z_{(1-\frac{\alpha}{2})}. However, there is no theoretical justification for preferring t-quantiles over standard normal quantiles.

For our CPSdata regression, we obtain the following robust confidence intervals:

[,1] [,2]

(Intercept) 0.9015028254 0.9728138849

education 0.1104457789 0.1147075373

experience 0.0344968746 0.0379682407

I(experience^2) -0.0006151078 -0.0005391928

Classical confidence intervals are slightly too small:

2.5 % 97.5 %

(Intercept) 0.9049020666 0.9694146436

education 0.1106652300 0.1144880862

experience 0.0346500248 0.0378150905

I(experience^2) -0.0006098851 -0.0005444154

Robust t-tests

The robust version of the two-sided t-test with

H_0: \beta_j = \beta_j^0 \quad vs. \quad H_1: \beta_j \neq \beta_j^0

has the test statistic

T_0^{\tiny HC} = \frac{\widehat \beta_j - \beta_j^0}{se_{\tiny HC}(\widehat \beta_j)}.

The test decision rule is \begin{align*}

\text{do not reject} \ H_0 \quad \text{if} \ &|T_0^{\tiny HC}| \leq z_{(1-\frac{\alpha}{2})}, \\

\text{reject} \ H_0 \quad \text{if} \ &|T_0^{\tiny HC}| > z_{(1-\frac{\alpha}{2})}.

\end{align*}

The null distribution CDF is F_{0}=\Phi, and the p-value for the two-sided t-test is

p\text{-value} = 2(1-\Phi(|T_0^{\tiny HC}|)).

Regression outputs with robust standard errors can be created with the following command:

z test of coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 9.3716e-01 1.8192e-02 51.515 < 2.2e-16 ***

education 1.1258e-01 1.0872e-03 103.547 < 2.2e-16 ***

experience 3.6233e-02 8.8557e-04 40.914 < 2.2e-16 ***

I(experience^2) -5.7715e-04 1.9366e-05 -29.802 < 2.2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

You can also include robust standard errors in stargazer outputs:

|

|

|

|

Dependent variable:

|

|

|

|

|

|

log(wage)

|

|

|

|

education

|

0.113***

|

|

|

(0.001)

|

|

|

|

|

experience

|

0.036***

|

|

|

(0.001)

|

|

|

|

|

I(experience2)

|

-0.001***

|

|

|

(0.00002)

|

|

|

|

|

Constant

|

0.937***

|

|

|

(0.018)

|

|

|

|

|

|

|

Observations

|

50,742

|

|

R2

|

0.237

|

|

Adjusted R2

|

0.237

|

|

Residual Std. Error

|

0.590 (df = 50738)

|

|

|

|

Note:

|

p<0.1; p<0.05; p<0.01

|

Robust F-tests

For a robust F-test of the form

H_0: \boldsymbol R' \beta = \boldsymbol c, \quad vs. \quad H_1: \boldsymbol R' \beta \neq \boldsymbol c,

the robust F-statistic

F_0 = \frac{1}{q} (\boldsymbol R' \widehat \beta - \boldsymbol c)' (\boldsymbol R' (\widehat{Var}_{\tiny HC3}[\widehat \beta]) \boldsymbol R)^{-1} (\boldsymbol R' \widehat \beta - \boldsymbol c)

can be used.

The asymptotic critical value is the 1-\alpha quantile of the F_{q,\infty} distribution. Note that the F_{q,\infty} distribution coincides with \chi^2_q/q, so you can use the 1-\alpha quantile of \chi^2_q divided by q.

If c is the 1-\alpha critical value, the asymptotic size-\alpha F-test has the following decision rule: \begin{align*}

\text{do not reject} \ H_0 \quad \text{if} \ &F_0 \leq c, \\

\text{reject} \ H_0 \quad \text{if} \ &F_0 > c.

\end{align*}

Autocorrelation-robust standard errors

OLS Assumptions for the Dynamic Regression Model

The variables Y_i and X_i = (1, X_{i2}, \ldots, X_{ik})' satisfy the linear regression equation

Y_i = X_i' \beta + u_i, \quad i=1, \ldots, n,

which has the matrix representation \boldsymbol Y = \boldsymbol X \beta + \boldsymbol u.

For each i=1, \ldots, n we assume

(A1) conditional mean independence: E[u_i \mid X_i] = 0

(A2b) weak dependence: (Y_i, X_i') is stationary short-memory, and (Y_i, X_i') and (Y_{i-\tau}, X_{i-\tau}') become independent as \tau gets large

(A3) large outliers unlikely: 0 < E[Y_i^4] < \infty, 0 < E[X_{ij}^4] < \infty for all j=1, \ldots, k

(A4) no perfect multicollinearity: \boldsymbol X has full column rank

Since the observations are not independent by (A2b), the covariance matrix Var[\widehat \beta \mid \boldsymbol X] has a more complicated structure.

Similarly, to the long-run variance in the sample mean case, the term \sum_{i=1}^n \sigma_i^2 X_i X_i' must be replaced by its long-run counterpart. It turns out that the following matrix is a consistent estimate for Var[\widehat \beta \mid \boldsymbol X] for dynamic linear regressions:

\widehat{Var}_{\tiny HAC}[\widehat \beta] = \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1} \boldsymbol V_{\tiny HAC} \bigg(\sum_{i=1}^n X_i X_i'\bigg)^{-1}

where

\boldsymbol V_{\tiny HAC} = \sum_{i=1}^n \widehat u^2_i X_i X_i' + \sum_{\tau=1}^{\ell_n-1} \frac{\ell_n-\tau}{\ell_n} \widehat u_i \widehat u_{i-\tau} \big( X_i X_{i-\tau}' + X_{i-\tau} X_i' \big),

and \ell_n is some suitable truncation parameter. A common rule of thumb is to select \ell_n = \lfloor 0.75 \cdot n^{1/3} \rfloor

We use the label HAC because the covariance matrix provides heteroskedasticity and autocorrelation-robust standard errors, which are given by

se_{\tiny HAC}(\widehat \beta_j) = \sqrt{\big[\widehat{Var}_{\tiny HAC}[\widehat \beta]\big]_{jj}}.

For linear regressions with time series data you should use se_{\tiny HAC}(\widehat \beta_j) for confidence intervals and t-tests, and you can use \widehat{Var}_{\tiny HAC}[\widehat \beta] for F-tests.

For a given linear regression model object lmobj, the NeweyWest(lmobj) command from the sandwich package returns the HAC covariance matrix estimate.

Additional reading

- Stock and Watson (2019), Sections 5, 7, 18, 19

- Hansen (2022b), Sections 7

- Davidson and MacKinnon (2004), Section 4, 5, 6